Ever wondered how much energy your cloud computing is using?

Jisc have, but it is not all that easy to get an answer. In an attempt to find out, Etsy created what they call Cloud Jewels: estimated conversion factors that turn cloud compute consumption into watt-hours. Building on this, an open-source project called Cloud Carbon Footprint (CCF) aims to provide “visibility and tooling to measure, monitor and reduce your cloud carbon emissions”. CCF supports GCP, Azure and AWS and is “…open and extensible with the potential to add other cloud providers, on-premises or co-located data centres…”.
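At its core, the Cloud Jewels idea is a multiplication: a usage figure (for example vCPU-hours of compute or terabyte-hours of storage) times an estimated energy coefficient. CCF also multiplies by the data centre's power usage effectiveness (PUE). The Python sketch below shows that arithmetic. The `Coefficients` class, the `estimate_wh` function and every coefficient value are hypothetical placeholders, not the figures Etsy or CCF publish.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coefficients:
    """Energy conversion factors in the style of Etsy's Cloud Jewels.

    The numbers passed in below are placeholders for illustration,
    not Etsy's or CCF's published values.
    """

    wh_per_vcpu_hour: float        # watt-hours per vCPU-hour of compute
    wh_per_tb_hour_storage: float  # watt-hours per terabyte-hour of storage
    pue: float                     # power usage effectiveness of the data centre


def estimate_wh(vcpu_hours: float, storage_tb_hours: float, c: Coefficients) -> float:
    """Estimate energy in watt-hours for compute plus storage usage."""
    if vcpu_hours < 0 or storage_tb_hours < 0:
        raise ValueError("usage cannot be negative")
    # Energy drawn by the IT equipment itself...
    it_wh = vcpu_hours * c.wh_per_vcpu_hour + storage_tb_hours * c.wh_per_tb_hour_storage
    # ...scaled up by PUE to account for cooling and other facility overhead.
    return it_wh * c.pue


placeholder = Coefficients(wh_per_vcpu_hour=2.0, wh_per_tb_hour_storage=1.0, pue=1.5)
print(estimate_wh(100, 10, placeholder))  # (100 * 2.0 + 10 * 1.0) * 1.5 = 315.0
```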

This blog post outlines my thinking on how we could apply Cloud Carbon Footprint to Jisc AWS accounts. Both Etsy and Cloud Carbon Footprint describe their work as “experimental”, but my hope is that these tools will at least give us a baseline for energy use, even if the absolute numbers are not exact. We can then use that baseline to measure trends towards lower energy usage. If you want to find out more, watch this talk by Dan Lewis-Toakley, organised by Green Tech South West.
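Why can a baseline be useful when the absolute numbers are uncertain? If every estimate is off by the same multiplicative factor, that factor cancels out when a later period is compared with the baseline. A small Python sketch with made-up figures (the `percent_change` function and the numbers are illustrative only):

```python
def percent_change(baseline_wh: float, current_wh: float) -> float:
    """Percentage change in estimated energy use relative to a baseline.

    A negative result means estimated energy use has fallen.
    """
    if baseline_wh <= 0:
        raise ValueError("baseline must be positive")
    return (current_wh - baseline_wh) / baseline_wh * 100.0


# Hypothetical monthly estimates in watt-hours, not real Jisc figures.
baseline, latest = 400.0, 300.0
print(percent_change(baseline, latest))  # -25.0

# Suppose every estimate is wrong by the same factor k (say the conversion
# factors are three times too high). k cancels out of the ratio, so the
# trend is unchanged even though the absolute numbers are off.
k = 3.0
print(percent_change(k * baseline, k * latest))  # -25.0
```

This only holds for a consistent error. If, say, the compute coefficient is wrong but the storage one is right, a shift in the mix of compute and storage would still distort the trend.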

Getting Started

CCF has two ways of obtaining resource consumption data from an AWS account: polling the account through the AWS APIs, or reading resource consumption from a cost and usage report (CUR). A CUR is created within an account and will only write to an S3 bucket in that same account. Many of the Jisc AWS accounts host production services, and at this early stage I did not want to affect how those accounts run, or cause governance headaches by asking for programmatic access. That left tracking usage through cost and usage reports.

CCF uses the standard AWS CUR/Athena integration to query and plot energy usage. Athena is a neat serverless AWS service that lets you query data in S3 using standard SQL. However, because the CUR data is written to a bucket in each account, getting the CUR/Athena integration working there would again mean needing access to those accounts. Athena would also add some (albeit small) charges to each account, as it uses a pay-per-query model. So what to do?
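To make the Athena side more concrete, here is a hedged Python sketch that builds the kind of SQL you might run over an Athena-integrated CUR table. The database and table names are hypothetical, and the query is illustrative rather than the one CCF actually issues. The `line_item_*` columns are standard CUR columns as they appear in Athena, but the format of the `year`/`month` partition values is an assumption to check against your own table.

```python
import re

# This sketch only accepts lower-case letters, digits and underscores in names,
# which also stops arbitrary text being spliced into the SQL string.
_NAME = re.compile(r"[a-z0-9_]+")


def _checked(name: str) -> str:
    if not _NAME.fullmatch(name):
        raise ValueError(f"unexpected database or table name: {name!r}")
    return name


def daily_usage_query(database: str, table: str, year: int, month: int) -> str:
    """Build an Athena SQL query summing CUR usage per account, day and usage type.

    Assumes the column names of an Athena-integrated CUR table and its
    year/month partition columns; check these against your own table.
    """
    db, tbl = _checked(database), _checked(table)
    return (
        "SELECT line_item_usage_account_id,\n"
        "       date_trunc('day', line_item_usage_start_date) AS usage_day,\n"
        "       line_item_product_code,\n"
        "       line_item_usage_type,\n"
        "       SUM(line_item_usage_amount) AS usage_amount\n"
        f'FROM "{db}"."{tbl}"\n'
        # Filtering on partitions limits the data scanned, and so the query cost.
        f"WHERE year = '{int(year)}' AND month = '{int(month)}'\n"
        "GROUP BY 1, 2, 3, 4\n"
        "ORDER BY usage_day"
    )


print(daily_usage_query("cur_central", "jcs_dev_cur", 2021, 3))
```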

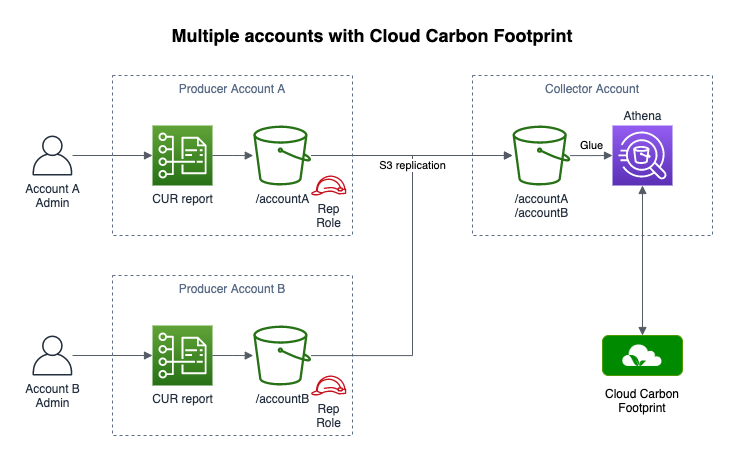

Finally we come to the plan. For each account we want to monitor, the administrator of that account creates an S3 bucket. Then in each account a cost and usage report is created (with Athena integration). Next S3 replication is used to copy the CUR data from the account into an account owned by Jisc Cloud Solutions (JCS). In this central logging account we setup the Athena integration and run CCF against this copy of the data. This approach has minimal impact on the monitored accounts.

It looks a bit like this:

You can find examples of the templates on my github account.

I wanted to make things easier for each account administrator, so created a CloudFormation template for them. This template creates an S3 bucket and a role and then sets up one-way S3 replication to the central bucket. The administrator then sends me the role ARN to allow it to replicate into the central bucket. Finally they create a cost and usage report (not currently supported by CloudFormation) and tick the Athena integration box. Then they can sit back and wait (the CUR data starts to arrive within 24 hours) and let us do the rest.
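The one-way replication the template sets up can be expressed as a replication configuration on the account's bucket. The sketch below builds one in Python as a plain dict. With boto3 it could be passed as the `ReplicationConfiguration` argument to the S3 client's `put_bucket_replication`, and the `ReplicationConfiguration` property of an `AWS::S3::Bucket` resource in CloudFormation takes a similar structure. The function name, bucket names, account IDs and prefix are all hypothetical, and this is not the actual template from the repository.

```python
import json


def replication_config(role_arn: str, central_bucket: str,
                       central_account_id: str, cur_prefix: str) -> dict:
    """One-way replication rule from an account's CUR bucket to the central bucket.

    Follows the ReplicationConfiguration structure used by the S3
    PutBucketReplication API. S3 replication needs versioning enabled
    on both the source and destination buckets.
    """
    return {
        # IAM role that S3 assumes to copy objects on the account's behalf.
        "Role": role_arn,
        "Rules": [
            {
                "ID": f"replicate-cur-{cur_prefix}",
                "Priority": 1,
                "Status": "Enabled",
                # Only objects written under this account's CUR prefix are copied.
                "Filter": {"Prefix": f"{cur_prefix}/"},
                # Delete markers in the source bucket are not replicated.
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {
                    "Bucket": f"arn:aws:s3:::{central_bucket}",
                    "Account": central_account_id,
                    # Make the central account the owner of the replicas.
                    "AccessControlTranslation": {"Owner": "Destination"},
                },
            }
        ],
    }


# Hypothetical names and account IDs, for illustration only.
config = replication_config(
    role_arn="arn:aws:iam::111111111111:role/cur-replication",
    central_bucket="jcs-central-cur",
    central_account_id="999999999999",
    cur_prefix="jcs-dev",
)
print(json.dumps(config, indent=2))
```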

Both the account and central buckets are created in the same AWS region, and neither permits public access. Athena (and therefore CCF) accesses the CUR data in the central bucket through a role, and the monitored accounts also use a role to write to the central bucket. We partition the CUR data in the central bucket by using a unique identifier per account as the CUR report destination prefix.
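Granting each account's replication role access to the central bucket can be done with a bucket policy. This hedged sketch generates one from a hypothetical mapping of account prefix to role ARN. It scopes each role to its own prefix, so one account cannot write into another account's partition, and it never grants access to a public principal. The function name and example values are made up for illustration.

```python
import json


def central_bucket_policy(central_bucket: str, roles_by_prefix: dict[str, str]) -> dict:
    """Bucket policy letting each account's replication role write only under its own prefix.

    roles_by_prefix maps each account's unique CUR prefix to the replication
    role ARN that account's administrator sent over.
    """
    if not roles_by_prefix:
        raise ValueError("at least one replication role is required")
    bucket_arn = f"arn:aws:s3:::{central_bucket}"
    statements = []
    for prefix, role_arn in sorted(roles_by_prefix.items()):
        statements.append({
            "Effect": "Allow",
            "Principal": {"AWS": role_arn},
            "Action": [
                "s3:ReplicateObject",
                "s3:ReplicateDelete",
                "s3:ReplicateTags",
                "s3:ObjectOwnerOverrideToBucketOwner",
            ],
            # Scope each role to its own account's partition of the bucket.
            "Resource": f"{bucket_arn}/{prefix}/*",
        })
    # Bucket-level versioning permissions, as in AWS's cross-account replication examples.
    statements.append({
        "Effect": "Allow",
        "Principal": {"AWS": sorted(set(roles_by_prefix.values()))},
        "Action": ["s3:GetBucketVersioning", "s3:PutBucketVersioning"],
        "Resource": bucket_arn,
    })
    return {"Version": "2012-10-17", "Statement": statements}


# Hypothetical prefixes and role ARNs for two monitored accounts.
policy = central_bucket_policy("jcs-central-cur", {
    "jcs-dev": "arn:aws:iam::111111111111:role/cur-replication",
    "jcs-demo": "arn:aws:iam::222222222222:role/cur-replication",
})
print(json.dumps(policy, indent=2))
```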

AWS cost and usage reports come with a CloudFormation template that deploys the necessary glue (in the form of AWS Glue resources) to get Athena working. This template assumes the CUR data is held in the home account’s bucket. One day I’d like to explore a blanket configuration that pulls CUR data from multiple accounts into a single Athena table, but for now I’m manually updating the AWS-generated template to create unique table names and to use the central bucket instead.
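Part of those manual edits is choosing two per-account values: a unique Glue/Athena name, and the location in the central bucket where that account's CUR data lives. Because each account's data sits under its own prefix, both can be derived from that prefix. In this small Python sketch, the function names and the `cur_` naming scheme are hypothetical, and the S3 layout is an assumption to verify against what actually arrives in the bucket.

```python
import re


def athena_name(*parts: str) -> str:
    """Join parts into a lower-case name of letters, digits and underscores."""
    joined = "_".join(parts).lower()
    name = re.sub(r"[^a-z0-9_]+", "_", joined).strip("_")
    if not name:
        raise ValueError("name is empty after cleaning")
    return name


def cur_s3_path(central_bucket: str, account_prefix: str, report_name: str) -> str:
    """Central-bucket folder holding one account's CUR files.

    Assumes the <prefix>/<report>/<report>/ layout that Athena-integrated
    CURs are written with; check this against what actually lands in the bucket.
    """
    return f"s3://{central_bucket}/{account_prefix}/{report_name}/{report_name}/"


# Hypothetical values for one monitored account.
prefix, report = "jcs-dev", "ccf-report"
print(athena_name("cur", prefix))                      # cur_jcs_dev
print(cur_s3_path("jcs-central-cur", prefix, report))  # s3://jcs-central-cur/jcs-dev/ccf-report/ccf-report/
```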

To date I have only tested this with the Jisc Cloud Solutions development and demo accounts (neither of which has particularly high energy usage), but so far so good.

What next?

Up until now I’ve only been working to get Cloud Carbon Footprint to run against a single account. I think it is capable of working with multiple accounts and multiple clouds, and I want to explore this further, initially by using the same UI to compare different AWS accounts. After that, I might try integrating some Azure accounts too.

Once we are comfortable with this approach, we could drop the per-account setup and use the payer account’s cost and usage reports instead. I didn’t want to lead with that, as I’m trying to avoid scaring people with payer-level data and the potential for data sharing (showing accounts side by side on the CCF dashboard, for example).

I’m currently running CCF locally on my laptop. Ideally we’d run it in AWS (probably on ECS with Fargate), which would keep all the data and processing inside our AWS account.

Finally, if this all goes well and the data proves useful, it would be good to work with our members to use this tool to help monitor and reduce their cloud energy use (and, in turn, probably their costs).